Master Linear Algebra with clear and concise explanations, exercises, and practical examples in various domains.

What you will learn

Understanding matrix algebra and applying it to solve linear equations and transformations, with practical examples in Python.

Mastering vectors, vector properties, vector spaces, subspaces, and their application in coordinate systems. The fundamental subspaces and how to compute them.

Mastery of orthogonal and orthonormal vectors and orthogonal projections, then computing minimal distances and performing Gram-Schmidt orthogonalization.

Matrix decompositions such as the eigendecomposition, the Cholesky decomposition, and the singular value decomposition. Mastery of diagonalization, full-rank approximation, and low-rank approximation.

Matrix inverses, least squares, and the normal equation. Linear regression and hands-on practice with Kaggle house price prediction.

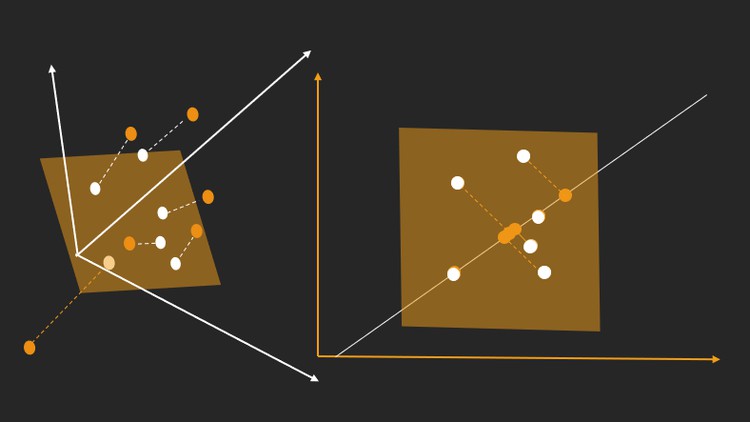

Explaining and deriving Principal Component Analysis (PCA) from scratch and applying it to face recognition using the Eigenfaces algorithm.

Description

In this course, we look at core Linear Algebra concepts and how they can be used to solve real-world problems. We shall go through core Linear Algebra topics such as matrices, vectors, and vector spaces. If you are interested in learning the mathematical concepts of linear algebra but also want to apply them to data science, statistics, finance, engineering, and other fields, then this course is for you! We shall explain every math concept in detail and also implement each one in Python. We place strong emphasis on feedback, so feel free to ask as many questions as you like! Let’s make this course as interactive as possible so that we still get that classroom experience.

Here are the different concepts you’ll master after completing this course.

- Fundamentals of Linear Algebra

- Operations on a single Matrix

- Operations on two or more Matrices

- Performing Elementary Row Operations

- Finding the Matrix Inverse

- Gaussian Elimination Method

- Vectors and Vector Spaces

- Fundamental Subspaces

- Matrix Decompositions

- Matrix Determinant and the Trace Operator

- Core Linear Algebra concepts used in Machine Learning and Data Science

- Hands-on experience applying Linear Algebra concepts on the computer using the Python programming language

- Applying Linear Algebra to real-world problems

- Skills needed to pass any Linear Algebra exam

- Principal Component Analysis

- Linear Regression

YOU’LL ALSO GET:

- Lifetime access to this course

- Friendly and prompt support in the Q&A section

- Udemy Certificate of Completion available for download

- 30-day money back guarantee

Who this course is for:

- Computer Vision practitioners who want to learn how state-of-the-art computer vision models are built and trained using deep learning.

- Anyone who wants to master deep learning fundamentals and also practice deep learning using best practices in TensorFlow.

- Deep Learning practitioners who want to gain mastery of how things work under the hood.

- Beginner Python Developers curious about Deep Learning.

Enjoy!