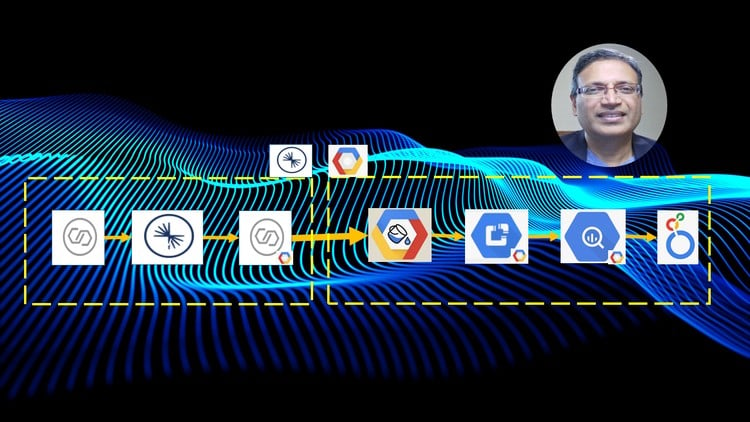

Hands-on Course – Real-Time Data Streaming Pipeline Using Confluent Kafka, GCP & Looker from Scratch

⏱️ Length: 2.0 hours total

👥 37 students

🔄 January 2026 update


Course Overview

- This project-based course walks you through building real-time data streaming pipelines with Confluent Kafka and Google Cloud Platform (GCP), covering the entire lifecycle of streaming data from the ground up.

- Learn to build scalable data infrastructure that handles high-velocity data streams, so your applications and analytics can act on events as they arrive.

- Discover how to integrate Kafka’s distributed messaging with GCP services to build a resilient, cloud-native data platform.

- The curriculum emphasizes hands-on implementation and culminates in a fully functional, deployed pipeline that runs from raw data ingestion to visualization.

- Connect your processed data to Looker to design dynamic dashboards and reports that keep business intelligence up to date.

- Understand why real-time data matters in modern digital environments, and gain the skills to architect solutions that deliver near-real-time operational insights and a competitive edge.

- Acquire foundational knowledge of designing fault-tolerant, high-performance streaming architectures that handle large data volumes with low latency and high reliability.
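The ingestion-to-visualization flow described above can be pictured as a chain of small stages. The Python sketch below is illustrative only: it does not touch Kafka, GCP, or Looker, and the event fields (`sensor_id`, `ts`, `value`), the 60-second window, and the function names are hypothetical. It parses raw message payloads, filters out malformed records, and aggregates the rest into tumbling windows, producing rows shaped like those you might load into a warehouse table for dashboarding.

```python
import json
from collections import defaultdict
from collections.abc import Iterable, Iterator

# Hypothetical tumbling-window size, in seconds.
WINDOW_SECONDS = 60


def parse(raw_messages: Iterable[bytes]) -> Iterator[dict]:
    """Ingestion stage: decode raw JSON payloads, skipping malformed ones."""
    for raw in raw_messages:
        try:
            event = json.loads(raw)
        except ValueError:  # covers JSONDecodeError and invalid UTF-8
            continue  # a real pipeline might route these to a dead-letter topic
        if isinstance(event, dict):
            yield event


def valid(events: Iterable[dict]) -> Iterator[dict]:
    """Filtering stage: keep only events that carry the fields we aggregate on."""
    for e in events:
        if (
            isinstance(e.get("sensor_id"), str)
            and isinstance(e.get("ts"), int)
            and isinstance(e.get("value"), (int, float))
        ):
            yield e


def aggregate(events: Iterable[dict]) -> list[dict]:
    """Aggregation stage: count and average `value` per sensor per tumbling window."""
    totals: dict[tuple[str, int], float] = defaultdict(float)
    counts: dict[tuple[str, int], int] = defaultdict(int)
    for e in events:
        # Align each event to the start of its fixed, non-overlapping window.
        window_start = e["ts"] - e["ts"] % WINDOW_SECONDS
        key = (e["sensor_id"], window_start)
        totals[key] += e["value"]
        counts[key] += 1
    return [
        {
            "sensor_id": sensor,
            "window_start": start,
            "count": counts[(sensor, start)],
            "avg_value": totals[(sensor, start)] / counts[(sensor, start)],
        }
        for sensor, start in sorted(totals)
    ]


if __name__ == "__main__":
    sample = [
        b'{"sensor_id": "a", "ts": 5, "value": 10}',
        b'{"sensor_id": "a", "ts": 50, "value": 20}',
        b'{"sensor_id": "a", "ts": 65, "value": 30}',
        b"not json",
        b'{"sensor_id": "b", "ts": 10}',  # missing "value", filtered out
    ]
    for row in aggregate(valid(parse(sample))):
        print(row)
```

Run as a script, this prints one row for sensor `a` in window 0 (count 2, average 15.0) and one for window 60 (count 1, average 30.0); the malformed and incomplete messages are dropped. Aggregating a finite list keeps the sketch testable; a real streaming job would also have to decide when a window is complete and how to treat late-arriving events.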


Requirements / Prerequisites

- A basic understanding of data concepts, including what data is, common database structures, and how data flows within an organization.

- Familiarity with a command-line interface (CLI) on Linux, macOS, or Windows, since many interactions with cloud services and Kafka utilities are performed through commands.

- An active Google Cloud Platform (GCP) account, which you need to provision and use cloud services; the course is designed to be compatible with GCP’s free tier.

- A stable internet connection and a modern web browser for accessing course materials, the GCP console, and cloud-based development environments.

- No prior experience with Confluent Kafka or Google Cloud is required: the course takes a “from scratch” approach, making it well suited to motivated beginners.

- A genuine enthusiasm for learning about real-time data processing, distributed systems, and cloud technologies will significantly enhance your learning experience and retention.

- Basic familiarity with general programming concepts will help you follow the data transformation logic, although the course emphasizes configuration and integration over extensive coding.


Skills Covered / Tools Used

- Confluent Kafka Fundamentals: Understand Kafka’s core architecture, including the roles of producers, consumers, topics, partitions, and brokers, and the distributed commit log they are built on.

- Kafka Connect: Learn to implement and manage Kafka Connect for scalable, reliable data integration, deploying source connectors to ingest data and sink connectors to export processed data to various destinations.

- Google Cloud Platform (GCP) Services:

  - Compute Engine: Provision and manage virtual machines (VMs) to deploy and configure Kafka brokers, giving your streaming platform a flexible environment to run in.

  - BigQuery: Use Google’s fully managed, serverless data warehouse to store your processed streaming data cost-effectively and run fast analytical queries on it.

  - Cloud Storage: Use object storage buckets to stage intermediate data, archive logs, and provide a landing zone between pipeline stages.

  - Identity and Access Management (IAM): Configure appropriate roles and permissions for the users and services that access your GCP resources.

  - Virtual Private Cloud (VPC) Networking: Configure secure, isolated network environments for your GCP resources and control the traffic that flows between them.

- Real-time Data Processing: Develop practical skills in designing and implementing logic for real-time ingestion, transformation, filtering, and aggregation of high-velocity streaming events.

- Looker Dashboarding & Visualization: Learn to connect Looker to your processed data in BigQuery and build interactive dashboards, reports, and data visualizations for business intelligence.

- Streaming Pipeline Architecture Design: Acquire the ability to design resilient, scalable, and fault-tolerant streaming data architectures tailored to specific business requirements, considering various data sources and sinks.

- Basic Monitoring & Troubleshooting: Learn fundamental strategies for monitoring the health and performance of your Kafka cluster and GCP resources, and for identifying and resolving common issues in your data pipeline.

- Command-Line Tooling: Become proficient with essential command-line utilities for managing Kafka clusters and interacting with GCP services, enhancing your operational efficiency.
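The producer, partition, offset, and consumer-lag concepts listed above (Kafka fundamentals and basic monitoring) can be made concrete with a toy in-memory model. This is not the Apache Kafka or `confluent-kafka` API: `ToyTopic`, `ToyConsumerGroup`, and their methods are hypothetical names, and the key hash (`zlib.crc32`) is an illustrative stand-in for the hash functions real clients use. The sketch shows three properties: records with the same key land in the same partition, each partition assigns sequential offsets, and consumer lag is the gap between a partition's end offset and the group's committed offset.

```python
import zlib
from dataclasses import dataclass, field


@dataclass
class ToyTopic:
    """Hypothetical in-memory stand-in for a topic: one append-only log per partition."""
    name: str
    num_partitions: int
    partitions: list[list[tuple[bytes, bytes]]] = field(init=False)

    def __post_init__(self) -> None:
        self.partitions = [[] for _ in range(self.num_partitions)]

    def partition_for(self, key: bytes) -> int:
        # Same key -> same partition, which preserves per-key ordering.
        # crc32 is an illustrative choice, not the hash real Kafka clients use.
        return zlib.crc32(key) % self.num_partitions

    def produce(self, key: bytes, value: bytes) -> tuple[int, int]:
        """Append a record and return (partition, offset), like a delivery report."""
        p = self.partition_for(key)
        self.partitions[p].append((key, value))
        return p, len(self.partitions[p]) - 1

    def end_offset(self, partition: int) -> int:
        """Offset the next record appended to this partition will receive."""
        return len(self.partitions[partition])


@dataclass
class ToyConsumerGroup:
    """Hypothetical group that tracks its committed offset (next offset to read) per partition."""
    topic: ToyTopic
    committed: dict[int, int] = field(default_factory=dict)

    def poll(self, partition: int, max_records: int = 10) -> list[tuple[int, bytes, bytes]]:
        """Read up to max_records starting at the committed offset (does not commit)."""
        start = self.committed.get(partition, 0)
        records = self.topic.partitions[partition][start:start + max_records]
        return [(start + i, k, v) for i, (k, v) in enumerate(records)]

    def commit(self, partition: int, next_offset: int) -> None:
        self.committed[partition] = next_offset

    def lag(self, partition: int) -> int:
        # Consumer lag = log-end offset minus committed offset.
        return self.topic.end_offset(partition) - self.committed.get(partition, 0)


if __name__ == "__main__":
    orders = ToyTopic("orders", num_partitions=3)
    for i in range(5):
        orders.produce(b"customer-42", f"order-{i}".encode())
    p = orders.partition_for(b"customer-42")
    group = ToyConsumerGroup(orders)
    batch = group.poll(p, max_records=2)
    group.commit(p, batch[-1][0] + 1)  # commit the *next* offset to read
    print("partition", p, "read", [v for _, _, v in batch], "lag", group.lag(p))
```

In this run, all five records keyed `customer-42` land in one partition at offsets 0 to 4; after the group reads and commits the first two, its lag on that partition is 3. Committing the next offset to read, rather than the last one processed, mirrors the convention Kafka consumers use.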


Benefits / Outcomes

- Upon completion, you will have the practical skills to design, build, and deploy end-to-end real-time data streaming pipelines from scratch.

- Build a solid foundation and working proficiency in Confluent Kafka, so you can explain its core concepts and implement common distributed messaging patterns.

- Gain hands-on experience with the Google Cloud Platform services at the heart of data engineering workflows, including compute, storage, data warehousing (BigQuery), and access control (IAM).

- Develop the ability to integrate diverse data sources and destinations into a unified streaming architecture, solving complex data integration challenges in real-world scenarios.

- Build data visualization skills with Looker, turning processed streaming data into intuitive, interactive dashboards that surface business insights quickly.

- Strengthen your resume and career prospects for sought-after roles such as Data Engineer, Cloud Engineer, Streaming Architect, or Business Intelligence Developer.

- Cultivate an architectural mindset for building scalable, resilient, and maintainable streaming systems, understanding the trade-offs and best practices for production environments.

- Be ready to contribute to projects that require real-time analytics, event-driven applications, and immediate operational intelligence, making you a valuable part of your organization’s data strategy.

- Build a tangible project that can serve as a strong portfolio piece, showcasing your ability to implement a complete streaming data pipeline using industry-standard tools and cloud infrastructure.


PROS

- Hands-on, Project-Based Learning: Provides practical experience in building a complete, functional streaming pipeline, solidifying theoretical knowledge.

- Industry-Relevant Technologies: Focuses on highly demanded tools like Confluent Kafka, Google Cloud Platform, and Looker, boosting career readiness.

- Beginner-Friendly Approach: Structured to teach “from scratch,” making complex topics accessible to learners with limited prior experience in streaming or cloud.

- End-to-End Pipeline Coverage: Teaches comprehensive data flow, from initial ingestion and processing to storage and advanced visualization, ensuring a holistic understanding.

- Real-Time Data Expertise: Develops skills in processing and analyzing data as it arrives, which is essential for modern analytics, IoT, and operational intelligence.

- Concise and Efficient Duration: At 2.0 hours, it offers a focused and time-efficient introduction or quick upskilling opportunity without a lengthy commitment.

- Current Content: The January 2026 update indicates the material was refreshed recently, so its tools and practices should be reasonably current.

- Valuable Looker Integration: Adds a powerful data visualization and business intelligence tool to your skillset, enabling effective data presentation.

- Scalable Architecture Principles: Imparts knowledge on designing pipelines that can expand with growing data volumes and evolving business needs.

- Direct Career Impact: Equips learners with in-demand skills directly applicable to roles in data engineering and cloud computing.


CONS

- Limited Depth Due to Duration: Given its 2.0-hour format, the course mainly covers foundational concepts and basic implementations; mastering advanced Kafka features, complex GCP integrations, or large-scale production optimizations will require further dedicated study and practice beyond this introduction.

Learning Tracks: English, Development, Data Science
